Advanced Techniques

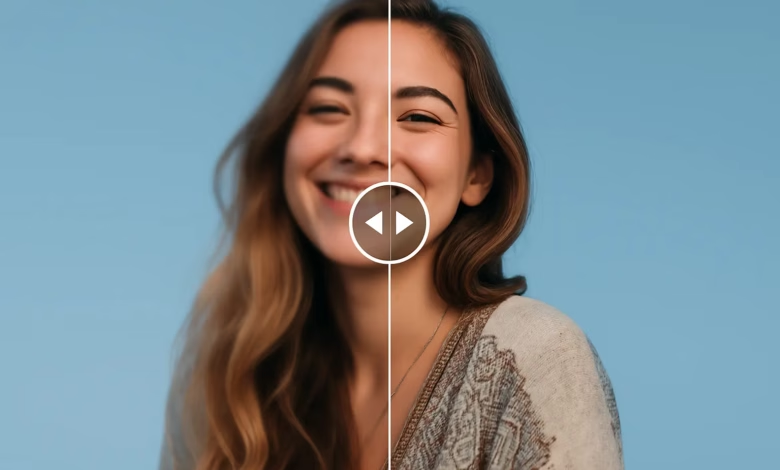

AI Image Quality Enhancement: The Best Free Tools for 2026

What is "Intelligent Restoration" for Photos and How Does It Change the Game?

Images have become a primary means of expression, so enhancing photo quality with artificial intelligence is no longer a luxury. Few things are more frustrating than capturing a beautiful scene only to discover that the photo is blurry or low in quality.

Artificial intelligence now offers a practical fix: it can improve image quality in seconds, even for photos we had almost lost hope of fixing.

What is meant by AI image quality enhancement?

AI image quality enhancement is a process that uses deep learning systems to analyze and improve a photo by reconstructing its details with high precision.

These systems recognize elements within the image, such as faces, fabrics, and landscapes, and then redraw the missing details in a way that mimics what a professional camera would have captured. In simpler terms: it is an “intelligent restoration” process that makes the image look as if it were taken in the best lighting conditions and with the highest possible resolution.

How does this technology work?

The models used in AI image quality enhancement are trained on millions of real images, allowing them to learn the fine details that make a picture clear and natural.

When you upload a blurry or old photo to one of these tools, the AI does not look the photo up in a database. Instead, it applies the patterns it learned during training to estimate and reconstruct the lost features, edges, and shadows.
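To make the training idea more concrete, here is a minimal sketch of how a training example could be prepared. It assumes one common setup, in which a sharp image is deliberately degraded and the model learns to map the degraded copy back to the original. The article does not say how any particular tool is trained, so the function names `downscale_2x` and `make_training_pair` are hypothetical, and the tiny grayscale grid stands in for a real photo.

```python
def downscale_2x(image):
    """Average each 2x2 block of pixels into one pixel (a simple box filter)."""
    height, width = len(image), len(image[0])
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, width - 1, 2)
        ]
        for y in range(0, height - 1, 2)
    ]


def make_training_pair(sharp_image):
    """Return (degraded_input, target): what the model sees and what it should produce."""
    return downscale_2x(sharp_image), sharp_image


# A 4x4 grayscale "photo" (0 = black, 255 = white), purely illustrative.
sharp = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [255, 255, 0, 0],
    [255, 255, 0, 0],
]

low_res, target = make_training_pair(sharp)
print(low_res)  # [[0.0, 255.0], [255.0, 0.0]]
```

Repeated over a very large number of such pairs, a model can learn which fine details usually accompany a given blurry pattern. That is also why the restored details are a plausible estimate and not a guaranteed copy of what was originally in front of the camera.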


Among the most prominent things this technology can do are:

- Upscaling images without losing their original features.

- Reducing noise caused by poor lighting or old cameras.

- Correcting colors and contrast to make the image appear more vibrant and realistic.

- Restoring old or damaged photos by filling in missing parts and removing imperfections.
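For comparison, it can help to see what classical, non-AI versions of two of these operations look like. The sketch below is not what any of the tools in this article run; it is a plain baseline, with hypothetical function names, showing nearest-neighbor upscaling and a linear contrast stretch on a tiny grayscale grid.

```python
def upscale_nearest(image, factor):
    """Enlarge an image by repeating every pixel `factor` times in each direction."""
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


def stretch_contrast(image):
    """Linearly rescale pixel values so the darkest becomes 0 and the brightest 255."""
    flat = [pixel for row in image for pixel in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A flat image has no contrast to stretch; return an unchanged copy.
        return [row[:] for row in image]
    return [[round((pixel - lo) * 255 / (hi - lo)) for pixel in row] for row in image]


small = [[1, 2], [3, 4]]
print(upscale_nearest(small, 2))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]

dull = [[100, 120], [180, 200]]
print(stretch_contrast(dull))
# [[0, 51], [204, 255]]
```

Notice that the upscaled grid is larger but contains no new information: every pixel is just a copy of its neighbor. This is the gap that AI-based tools try to close by estimating plausible detail instead of duplicating pixels.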

The Most Prominent AI-Based Image Enhancement Tools

In recent years, dozens of tools have emerged that use artificial intelligence to enhance images, some professional and others free and easy to use. Among the most famous in 2026 are:

First: Free Websites to Enhance Images Directly from the Browser

These sites do not require downloading or registration. Just upload the image and let the artificial intelligence do the rest:

Let’s Enhance

- Feature: Upscales image resolution up to 4x and automatically improves colors and lighting.

- How to use: Upload the image – wait a few seconds – download the enhanced version.

Upscale.media

- Very simple and free.

- Instantly enhances old or low-resolution photos.

- Works from a phone or computer without needing to register an account.

VanceAI Image Enhancer

- An intelligent tool that understands facial features and natural elements and redraws them with precision.

- Ideal for fixing personal photos or product images.

Second: The Easiest Mobile Apps for Beginners

If you prefer to do everything from your phone, these apps are excellent and user-friendly:

- Remini

- Pixlr

- PhotoRoom

Third: AI Tools within Famous Software

Even traditional software has begun to incorporate AI technologies:

- Photoshop AI Enhance: In the latest versions of Photoshop, you can upscale image resolution and improve lighting with a single click.

- Canva Magic Edit: Adds an “Enhance Image” option that intelligently and directly restores lighting and colors.

Quick Tip:

Do not overdo it with filters or enhancement tools, as some images can look “artificial” if excessively modified.

Use AI image quality enhancement only to restore balance and clarity, not to completely change the look of the photo.

Who are these tools for?

Anyone can benefit from AI image quality enhancement, whether you want to:

- Fix old family photos,

- Improve your commercial product images,

- Or prepare attractive posts for social media.

In most cases, artificial intelligence will do the job for you quickly and accurately. In the past, enhancing photos was a task that took long hours in complex programs like “Photoshop.” Today, a few seconds and a single AI tool are often enough to turn a dull photo into a clear, polished shot.